Building a Raspberry Pi 5 Cluster for Automated Trading

If you’re like me and you want complete control over your financial systems, value autonomy over institutional dependencies, and enjoy engineering your own tools, building a Raspberry Pi 5 cluster for automated trading is one of the most rewarding setups you can pursue. This guide covers a portable, low-power trading infrastructure that uses C++ for execution, R for signal modeling, SQL for data management, and Ubuntu Server as the operating system on every node.

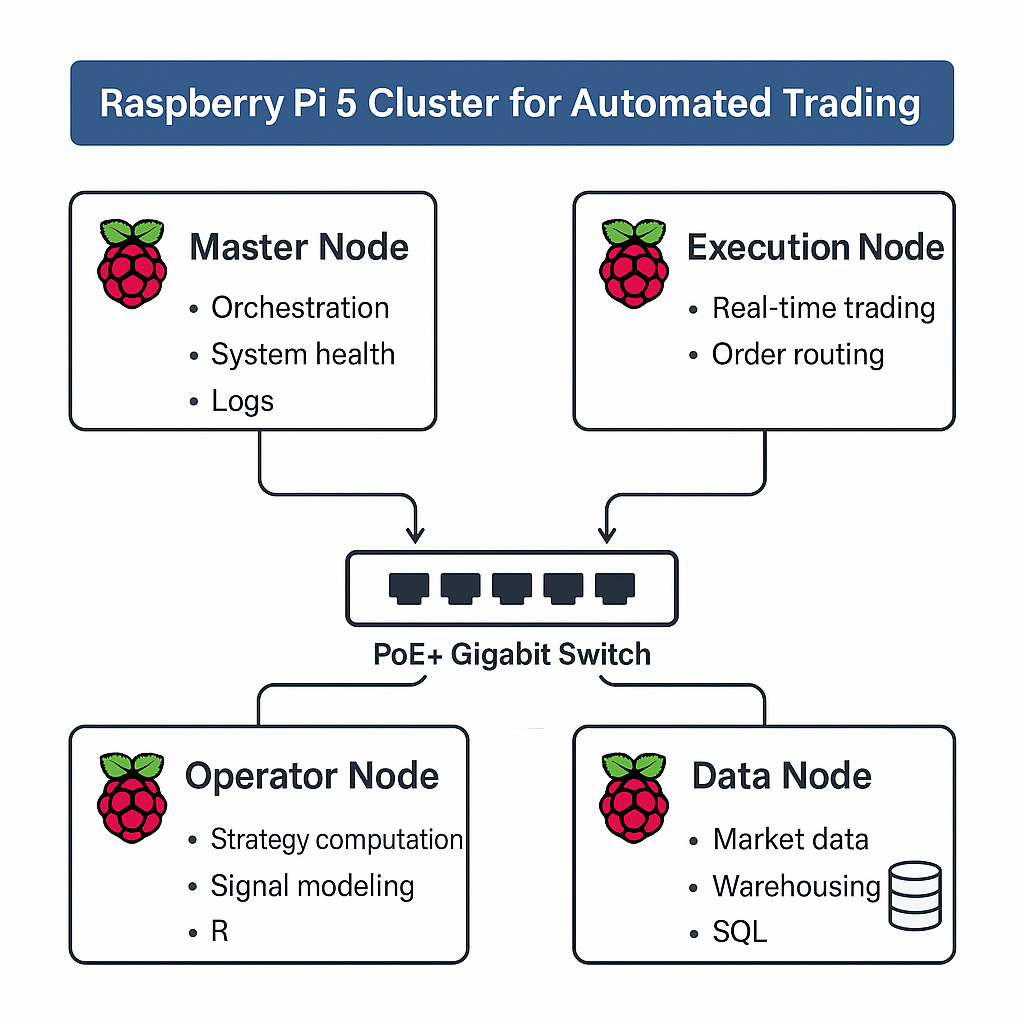

🧠 System Overview

This is a modular 4-node cluster built for full-stack trading operations:

- Master Node – Orchestration and system health

- Execution Node – Real-time trading and order routing

- Operator Node – Strategy computation and signal modeling

- Data Node – Market data scraping, warehousing, and archival

All nodes are connected via a Gigabit PoE+ switch for high-speed, full-duplex communication and centralized power.

🧱 Hardware Requirements

| Component | Details |
|---|---|
| Raspberry Pi 5 (x4) | 16GB RAM, active cooling, PoE+ support (via HAT) |
| PoE+ Gigabit Switch | Netgear GS305P or TP-Link TL-SG1005P; confirm the total PoE budget covers four nodes under load (the UniFi Flex Mini is PoE-powered and has no PoE output) |
| NVMe SSD | Mounted on the Data Node for warehousing/logging |
| Cooling | ICE Tower or 40mm fan per Pi, vertical stacking |
| Ethernet Cables | CAT6 or higher |
| PoE+ HATs | Official or 3rd-party, 802.3at (PoE+) compliant (up to 25.5W delivered per port) |

🖥️ Operating System

Use Ubuntu Server 24.04 LTS (ARM64) for all nodes (the Raspberry Pi 5 is supported from Ubuntu 23.10 onward):

- Headless operation

- Stable, lightweight, scriptable

- Easy to control via SSH and systemd

🧩 Node Setup & Configuration

1. Basic Flash and Install

Use Raspberry Pi Imager to flash Ubuntu Server.

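The Ubuntu Server image has SSH enabled by default. To set the username, password, and an SSH public key before first boot, use the OS customisation settings in Raspberry Pi Imager.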

# Create static hostnames

sudo hostnamectl set-hostname pi-master

# Also apply to pi-execute, pi-operator, pi-data respectively

Use Netplan (or nmtui, if NetworkManager is installed) to assign static IPs if your router doesn’t support DHCP reservations.

2. Inter-Node SSH Setup (from pi-master)

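# Generate one key pair on pi-master

ssh-keygen -t ed25519

# Replace "pi" with the user created when flashing; .local names need mDNS (avahi-daemon) on each node

ssh-copy-id pi@pi-execute.local

ssh-copy-id pi@pi-operator.local

ssh-copy-id pi@pi-data.local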
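
Before relying on key-based logins, it can help to confirm that each node actually answers on the SSH port. The following is a minimal sketch, assuming the hostnames used in this guide resolve from pi-master; the function names `ssh_port_open` and `check_nodes` are illustrative and not part of any existing tool.

```python
import socket

# Hostnames from this guide; adjust them if yours differ.
NODES = ["pi-execute.local", "pi-operator.local", "pi-data.local"]


def ssh_port_open(host: str, port: int = 22, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def check_nodes(nodes=NODES, port: int = 22) -> dict:
    """Map each hostname to True (reachable) or False (unreachable)."""
    return {host: ssh_port_open(host, port) for host in nodes}
```

Calling `check_nodes()` from pi-master returns a dictionary such as `{"pi-data.local": False, ...}`, which makes an unreachable node obvious before any deployment step fails.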

3. Package Install

Install on all nodes:

sudo apt update && sudo apt install -y git build-essential cmake make rsync openssh-server htop tmux ufw fail2ban

4. R Environment (on pi-operator)

sudo apt install -y r-base r-cran-tidyverse r-cran-forecast r-cran-rcpp

5. C++ Trading Engine (on pi-execute)

Example build process:

git clone https://github.com/yourrepo/trading-bot.git

cd trading-bot

mkdir build && cd build

# Optimized build; -j4 matches the Pi 5's four cores

cmake -DCMAKE_BUILD_TYPE=Release ..

make -j4

./trade_bot --live

6. Data Scripts (on pi-data)

Example market data collection with Python:

sudo apt install -y python3 python3-requests python3-pandas sqlite3

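# data_collector.py

import sqlite3, time

import requests

conn = sqlite3.connect('market.db')

conn.execute("CREATE TABLE IF NOT EXISTS prices (ts TEXT, price REAL)")

while True:

    try:

        resp = requests.get('https://api.binance.com/api/v3/ticker/price?symbol=BTCUSDT', timeout=10)

        resp.raise_for_status()

        price = float(resp.json()['price'])

        conn.execute("INSERT INTO prices VALUES (datetime('now'), ?)", (price,))

        conn.commit()

    except requests.RequestException as exc:

        print(f"fetch failed, retrying next cycle: {exc}")

    time.sleep(60)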

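Because the script loops forever, start it once at boot with a systemd service or an @reboot cron entry rather than on a repeating schedule.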
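
The modeling step later in this guide reads prices from a CSV file, so the collected rows need to leave SQLite at some point. Here is one possible sketch of that export, assuming the `prices` table has columns named `ts` and `price` (the column names and the `export_prices` helper are assumptions for illustration, not something the collector above guarantees).

```python
import csv
import sqlite3


def export_prices(db_path: str, csv_path: str, limit: int = 1000) -> int:
    """Write the most recent `limit` rows, oldest first, to csv_path.

    Returns the number of data rows written.
    """
    conn = sqlite3.connect(db_path)
    try:
        rows = conn.execute(
            "SELECT ts, price FROM ("
            "  SELECT ts, price FROM prices ORDER BY ts DESC LIMIT ?"
            ") ORDER BY ts ASC",
            (limit,),
        ).fetchall()
    finally:
        conn.close()

    with open(csv_path, "w", newline="") as fh:
        writer = csv.writer(fh)
        writer.writerow(["ts", "price"])  # header gives R a `price` column
        writer.writerows(rows)
    return len(rows)
```

For example, `export_prices("market.db", "btc.csv")` would produce a file whose `price` column can be read with `read.csv` on the operator node.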

🔁 System Roles and Long-Term Operation

🧠 Master Node (Orchestration)

- Deploys updates with rsync or Git

- Monitors temperature and load

- Pulls logs nightly

- Schedules all cron jobs

#!/bin/bash

# temp_monitor.sh (requires vcgencmd, bc, and a working mail command)

TEMP=$(vcgencmd measure_temp | grep -oE '[0-9]+\.[0-9]+')

if (( $(echo "$TEMP > 75" | bc -l) )); then

    echo "Temperature critical: $TEMP°C" | mail -s "Pi Overheating" user@domain.com

fi
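
If `vcgencmd` is not available on a node, the same check can be sketched in Python using the kernel's thermal zone file, which reports millidegrees Celsius. This is an illustrative alternative, not a replacement for the script above; the function names are hypothetical, and the 75 °C limit simply mirrors the shell version.

```python
import re
from pathlib import Path

# Standard Linux sysfs location; the value is in millidegrees Celsius.
THERMAL_ZONE = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_vcgencmd(output: str) -> float:
    """Extract degrees Celsius from text such as "temp=48.3'C"."""
    match = re.search(r"temp=([0-9]+(?:\.[0-9]+)?)", output)
    if match is None:
        raise ValueError(f"unrecognised temperature output: {output!r}")
    return float(match.group(1))


def read_sysfs_temp(path: Path = THERMAL_ZONE) -> float:
    """Read the SoC temperature in degrees Celsius from sysfs."""
    return int(path.read_text().strip()) / 1000.0


def is_critical(temp_c: float, limit_c: float = 75.0) -> bool:
    """True when the temperature is strictly above the limit."""
    return temp_c > limit_c
```

A monitoring job could call `is_critical(read_sysfs_temp())` on each node and send an alert only when it returns `True`.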

⚙️ Operator Node (R-based Modeling)

- Runs model fitting scripts, e.g. ARIMA, LSTM

- Outputs signals to shared location (e.g., /opt/signals/)

# strategy.R

library(forecast)

data <- read.csv('btc.csv')

fit <- auto.arima(data$price)

# One-step-ahead forecast; named fc so it does not shadow forecast()

fc <- forecast(fit, h = 1)

write.csv(as.data.frame(fc), file = '/opt/signals/next_signal.csv')
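
Because another process watches the signal directory, a half-written file could be picked up mid-write. One common way to avoid that is to write to a temporary file and rename it into place. The sketch below shows the idea in Python, assuming a wrapper script publishes the signal; `write_signal_atomically` is a hypothetical helper, and the same temp-then-rename pattern could be applied from R with `file.rename`.

```python
import csv
import os
import tempfile


def write_signal_atomically(path: str, header: list, row: list) -> None:
    """Write a one-row CSV to a temp file in the target directory, then rename it over path."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file must live on the same filesystem for the rename to be atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".signal-", suffix=".tmp")
    try:
        with os.fdopen(fd, "w", newline="") as fh:
            writer = csv.writer(fh)
            writer.writerow(header)
            writer.writerow(row)
            fh.flush()
            os.fsync(fh.fileno())
        os.chmod(tmp_path, 0o644)  # mkstemp creates the file as 0600
        os.replace(tmp_path, path)  # readers see the old file or the new one, never a partial one
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
```

With this pattern the final file name appears through a rename, so an inotify-based watcher would need to listen for the `moved_to` event on that name.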

🚀 Execution Node (C++ Engine)

- Monitors /opt/signals/ for new instructions

- Submits orders via broker API (Alpaca, IBKR, etc.)

- Logs confirmations and errors

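# Requires inotify-tools; close_write fires after each complete write, including overwrites of an existing file

inotifywait -m -e close_write /opt/signals/ | while read -r _; do

    ./trade_bot --execute /opt/signals/next_signal.csv

done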
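
inotify only reports changes made through the local kernel, so if /opt/signals/ is a network share written from another node, the events may never arrive. A polling loop is a simple fallback. The following is a rough sketch that compares the file's modification time between polls; `signal_changed` and `watch` are illustrative names, and the one-second interval is an assumption.

```python
import os
import time


def signal_changed(path: str, last_mtime_ns):
    """Return (changed, mtime_ns).

    changed is True when the file exists and its mtime differs from last_mtime_ns.
    A missing file is reported as unchanged.
    """
    try:
        mtime_ns = os.stat(path).st_mtime_ns
    except FileNotFoundError:
        return False, last_mtime_ns
    return mtime_ns != last_mtime_ns, mtime_ns


def watch(path: str, on_change, interval: float = 1.0, max_polls=None) -> None:
    """Poll path and call on_change(path) whenever its mtime changes.

    max_polls=None polls forever; a number stops after that many polls.
    """
    last = None
    polls = 0
    while max_polls is None or polls < max_polls:
        changed, last = signal_changed(path, last)
        if changed:
            on_change(path)
        polls += 1
        time.sleep(interval)
```

To hand new signals to the engine, `on_change` could be something like `lambda p: subprocess.run(["./trade_bot", "--execute", p], check=True)`.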

🗃️ Data Node (SQL + Logs)

- Stores long-term logs and strategy performance

- Backup job every night

rsync -az /opt/logs/ pi-master:/backups/logs/
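
Copying a SQLite file while the collector is writing to it can produce an inconsistent backup. A safer approach is to take a snapshot first and back up the snapshot. Here is a minimal sketch using the online backup API in Python's standard `sqlite3` module; the `snapshot_db` helper and the file names in the usage note are assumptions.

```python
import sqlite3


def snapshot_db(src_path: str, dest_path: str) -> None:
    """Copy a live SQLite database to dest_path using the online backup API."""
    src = sqlite3.connect(src_path)
    dest = sqlite3.connect(dest_path)
    try:
        # Connection.backup copies a consistent view even if src is being written to.
        src.backup(dest)
    finally:
        dest.close()
        src.close()
```

A nightly job could run `snapshot_db("market.db", "/opt/logs/market-snapshot.db")` just before the rsync step so the snapshot travels with the logs.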

🧠 Decision Making & Optimization

- Adjust model frequency (hourly, daily) based on observed volatility

- Add alerts using Telegram bots, mailx, or Slack webhooks

- Rebalance cluster roles as load increases; the one-role-per-node layout extends to five or more nodes

- Periodically test failover scripts and service watchdogs
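
The first point, tying model frequency to volatility, can be made concrete with a small rule. The sketch below measures volatility as the sample standard deviation of log returns and maps it to a refit interval. The thresholds (`high`, `low`) and the three intervals are placeholder assumptions to tune against your own data, not recommended values.

```python
import math
import statistics


def realized_volatility(prices) -> float:
    """Sample standard deviation of log returns over a price series."""
    if len(prices) < 3:
        raise ValueError("need at least three prices")
    if any(p <= 0 for p in prices):
        raise ValueError("prices must be positive")
    returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    return statistics.stdev(returns)


def refit_interval_minutes(vol: float, high: float = 0.01, low: float = 0.002) -> int:
    """Pick a refit interval: hourly when volatile, daily when calm, six-hourly otherwise."""
    if vol >= high:
        return 60
    if vol <= low:
        return 1440
    return 360
```

The master node could evaluate `refit_interval_minutes(realized_volatility(recent_prices))` once a day and adjust the operator node's schedule accordingly.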

✅ Conclusion

This system enables you to:

- Run a fully independent, hardware-based quant desk

- Execute, monitor, and research from anywhere

- Maintain performance and safety over time

- Expand modularly as needs grow

Own your algorithm. Own your stack. Own your outcome.